In the early days of blockchain, engineers and programmers dreamed of reshaping the world with it. Those dreams quickly ran into reality when they recognized blockchain’s bottlenecks: high latency and limited capacity for storage and computation.

Since then, many developers have turned to public chains, the infrastructure layer of blockchain. They aim to ramp up the transaction processing capacity of Bitcoin and Ethereum and to break through the limits on storage and computation, pushing the boundaries of blockchain infrastructure.

The first evolution: from Bitcoin to Ethereum

In 2008, Satoshi Nakamoto, a developer whose identity is still unknown, wrote the groundbreaking Bitcoin whitepaper “Bitcoin: A Peer-to-Peer Electronic Cash System,” which was published to a cryptography mailing list. The paper proposed an ingenious system wherein developers can create a safe and distributed database.

Bitcoin does not rely on a central authority to verify the authenticity of its data. Instead, it rewards the people who maintain the distributed ledger with BTC, a type of cryptocurrency.

In its early days, Bitcoin’s supporters were mostly cryptography experts working in laboratories. Then programmers started to jump on the bandwagon, eager to explore the blockchain world through code.

In 2013, Vitalik Buterin, a talented young Canadian developer, proposed Ethereum, the first public chain to support smart contracts. Programmers can not only develop applications on Ethereum but, more importantly, use them to trade almost anything: votes, domain names, financial transactions.

One of the standout innovations that Ethereum made popular is the initial coin offering (ICO), a type of crowdfunding in which a quantity of newly issued cryptocurrency is sold to investors in the form of “tokens,” in exchange for legal tender or other cryptocurrencies such as bitcoin or ether.

The capital raised through ICOs fueled the growth of Ethereum and its entire application ecosystem. In turn, Ethereum attracted more developers to launch innovative projects and raise money.

From 2008 to 2013, public chains went through their first evolution. If Bitcoin was the first generation of public chain, Ethereum was the second. In the five years after 2013, most developers gravitated to Ethereum.

Blockchain, however, is still at a very early stage. In 2017, the ICO boom sent cryptocurrency prices on a wild ride and drew a flood of newcomers into the blockchain world. At the same time, Bitcoin and Ethereum began to show their limitations.

Bitcoin is mainly used for transferring value, so it offers essentially no general-purpose storage or computation. Ethereum addresses part of that gap, but the computation it can perform is still scarce: each block is capped at a gas limit of only a few million gas. As a result, current smart contracts perform only elementary functions.

While investors pour money into blockchain startups, technical bottlenecks keep these projects from reaching their full potential in industries beyond money transfer and logistics tracking. In other words, the blockchain world still lacks real infrastructure, and public chains need further development.

The second evolution: TPS

The increasingly urgent problem for blockchain is slow transaction processing.

To complete a transaction in a blockchain system, miners must record the transaction data in a block. When blocks are filled to capacity, the remaining transactions have to wait until miners generate new blocks. As a result, a single transaction can take more than 10 minutes to confirm.
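To see why full blocks translate into waiting time, here is a minimal back-of-the-envelope sketch in Python. It assumes a simple first-come, first-served queue and ignores fee-based prioritization. The function names and the 2,000-transactions-per-block figure are illustrative assumptions; the 600-second interval reflects Bitcoin’s roughly 10-minute block target.

```python
import math


def blocks_until_included(queue_position: int, txs_per_block: int) -> int:
    """Blocks that must be mined before the transaction at the given
    1-based position in the pending queue gets recorded."""
    if queue_position < 1 or txs_per_block < 1:
        raise ValueError("queue_position and txs_per_block must be >= 1")
    return math.ceil(queue_position / txs_per_block)


def expected_wait_seconds(queue_position: int, txs_per_block: int,
                          block_interval_s: float) -> float:
    """Rough waiting time, assuming one block every block_interval_s seconds."""
    return blocks_until_included(queue_position, txs_per_block) * block_interval_s


def throughput_tps(txs_per_block: int, block_interval_s: float) -> float:
    """Upper bound on transactions per second for a given block capacity."""
    return txs_per_block / block_interval_s


# Illustrative numbers only (assumptions, not measurements).
wait = expected_wait_seconds(queue_position=5000, txs_per_block=2000,
                             block_interval_s=600)
print(f"wait: {wait / 60:.0f} minutes")
print(f"throughput: {throughput_tps(2000, 600):.2f} TPS")
```

With these assumed numbers, a transaction that is 5,000th in line waits for three blocks, or about 30 minutes, and the chain tops out at roughly 3.3 transactions per second.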

Therefore, many projects promise to speed up transactions and increase throughput, among them Lightning Network, Thunder Network, and QuarkChain.

It is not easy, however. Developers who try to raise transaction speed run into a triangle paradox. The three corners of the triangle are “security,” “decentralization,” and “scalability,” and you cannot maximize all of them at the same time.

For example, if you want more security or privacy, each transaction has to carry more data, which slows down processing and requires more storage space. The reverse holds as well: a faster, leaner system gives up some of that security or privacy. In other cases, it is decentralization that has to be compromised.

Developers are trying to find a balance point within the triangle.

Take Lightning Network, a “second layer” payment protocol that operates on top of a blockchain (most commonly Bitcoin), and Thunder Network, a payment channel for Ethereum. Both adopt a technique called “off-chain” transactions: users exchange signed transactions privately instead of processing every one of them on the blockchain.

If you buy two coffees directly on Ethereum, both purchases are recorded on the blockchain and you pay two transaction fees. Lightning Network and Thunder Network are instead designed to keep the two payments off-chain and record only the total price of the two coffees on the blockchain. This saves transaction fees and increases transaction speed.
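The coffee example can be sketched as a toy payment channel in Python. This is only an illustration of the bookkeeping idea, not the actual Lightning Network or Thunder Network protocol: real channels rely on signed commitment transactions and dispute handling, which are omitted here. The `PaymentChannel` class and its field names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PaymentChannel:
    """Toy model: many off-chain payments, one on-chain settlement."""
    deposit: int                    # amount the payer locks up when opening
    paid: int = 0                   # running total owed to the payee
    off_chain_updates: list = field(default_factory=list)
    closed: bool = False

    def pay(self, amount: int) -> None:
        """Record a payment off-chain; nothing is written to the blockchain."""
        if self.closed:
            raise RuntimeError("channel is closed")
        if amount <= 0:
            raise ValueError("amount must be positive")
        if self.paid + amount > self.deposit:
            raise ValueError("insufficient channel balance")
        self.paid += amount
        self.off_chain_updates.append(amount)

    def settle(self) -> dict:
        """Close the channel and return the single record that goes on-chain."""
        self.closed = True
        return {"to_payee": self.paid,
                "refund_to_payer": self.deposit - self.paid}


channel = PaymentChannel(deposit=10)
channel.pay(3)   # first coffee, off-chain
channel.pay(3)   # second coffee, off-chain
print(channel.settle())
```

Two payments were made, but only one settlement record, carrying the total of 6, would need to be written to the blockchain.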

Sharding is another smart approach widely adopted in blockchain, and even Buterin is actively promoting it. It splits the entire state of the network into a number of partitions called shards, each of which holds its own independent piece of state and transaction history.
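As a rough illustration of the partitioning idea, the sketch below assigns each transaction to a shard by hashing the sender’s account. This is a generic hash-partitioning scheme chosen for simplicity, not the sharding design of Ethereum or QuarkChain; the function names and the transaction layout are assumptions.

```python
import hashlib


def shard_for(account: str, num_shards: int) -> int:
    """Map an account to a shard deterministically via its SHA-256 hash."""
    if num_shards < 1:
        raise ValueError("num_shards must be >= 1")
    digest = hashlib.sha256(account.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def partition(transactions, num_shards: int) -> dict:
    """Group transactions by the shard that owns the sender's state."""
    shards = {index: [] for index in range(num_shards)}
    for tx in transactions:
        shards[shard_for(tx["sender"], num_shards)].append(tx)
    return shards


# Hypothetical transactions for illustration.
txs = [{"sender": f"account-{i % 5}", "amount": i} for i in range(20)]
for shard_id, shard_txs in partition(txs, num_shards=4).items():
    print(shard_id, len(shard_txs))
```

Because the mapping is deterministic, every transaction from the same account lands in the same shard, so each shard can keep its own slice of state and history and process it independently of the others.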

QuarkChain, a high-throughput blockchain that aims to achieve millions of transactions per second, drew much attention because of sharding. The network is trying to overcome the conflict between scalability and safety.

Another widely used technique for improving transaction speed is the hypernode. EOS is a great example. It relies on 21 hypernodes (block producers, in EOS’s own terminology) to produce blocks, which makes it more efficient and improves transaction speed. The trade-off, however, is less decentralization: EOS’s decentralization is questioned given that the top 100 holders own around 75 percent of the tokens.

Although EOS achieves decentralization to a certain degree because the hypernodes are elected by token holders, it chooses efficiency over decentralization, the fundamental principle of blockchain.

Generally speaking, 80 percent of current public chain projects set their sights on improving transaction speed but often overlook the blockchain’s inherent role as a computing unit.

In other words, to extend the application of blockchain to areas beyond finance and logistics, we need a breakthrough in storage and computation.

The third evolution: storage and computation

Some visionaries who want to unleash the full potential of the public chain have dived into the infrastructure of blockchain technology to enable storage and computation.

When it comes to storage on the blockchain, IPFS is the first thing that comes to mind. It is a protocol that uses content addressing and digital signatures to build decentralized, distributed applications.

Its creator, Juan Benet, who is also the founder and CEO of Protocol Labs, has an ambitious plan to build a practically unlimited storage space. The grand vision shows in the name itself: IPFS stands for “InterPlanetary File System.”
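The content addressing that IPFS relies on can be illustrated with a small Python sketch: data is stored and retrieved by the hash of its content rather than by its location. This is a simplification under stated assumptions. Real IPFS uses multihash-based content identifiers (CIDs) and a peer-to-peer network, whereas the hypothetical `ContentStore` class below uses a plain SHA-256 hex digest and an in-memory dictionary.

```python
import hashlib


class ContentStore:
    """Toy content-addressed store: the address is the hash of the data."""

    def __init__(self):
        self._blocks = {}

    def put(self, data: bytes) -> str:
        """Store data and return its content address."""
        address = hashlib.sha256(data).hexdigest()
        self._blocks[address] = data
        return address

    def get(self, address: str) -> bytes:
        """Fetch data and verify that it still matches its address."""
        data = self._blocks[address]
        if hashlib.sha256(data).hexdigest() != address:
            raise ValueError("content does not match its address")
        return data


store = ContentStore()
address = store.put(b"hello, interplanetary world")
assert store.get(address) == b"hello, interplanetary world"
# Identical content always maps to the same address.
assert store.put(b"hello, interplanetary world") == address
```

Since the address is derived from the content, anyone who retrieves the data can check its integrity without trusting the node that served it.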

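Blockchain technology adds a new economic model on top: the ledger records who provides resources, so contributors can be rewarded. This is the idea behind Filecoin, the incentive layer that Protocol Labs is building on IPFS. Owners of unused hard disks will be willing to join this economic model and contribute their resources.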

Speaking of blockchain computers, Dfinity, a decentralized cloud, belongs at the top of the list. With Ethereum struggling to deliver sufficient computing power, Dfinity, which is dedicated to overcoming that barrier, has attracted public attention.

Dfinity is creating a decentralized network whose protocols generate a reliable “virtual computer” running on top of a peer-to-peer network, on which software can be installed and run in the tamper-proof mode of smart contracts. The ultimate goal of Dfinity is to enable decentralized public networks to host a virtual computer of unlimited capacity.

Public chain projects like Filecoin and Dfinity enhance blockchain’s capability and extend blockchain’s potential uses.

However, storage and computation can hardly be separated. For example, the centralized cloud giant Amazon Web Services (AWS) provides both storage and high-performance computing services. Many emerging and ambitious projects, such as DxChain, are pursuing both.

In terms of storage, DxChain draws on P2P storage networks such as IPFS. DxChain splits an uploaded file into small pieces, distributes them randomly across different mining machines, and records them in the corresponding blockchains. With this distributed file system, DxChain makes use of unused hard disk resources. The data recorded in the blockchain keeps the economic model working, so owners of unused hard disks will be willing to join and contribute their resources.

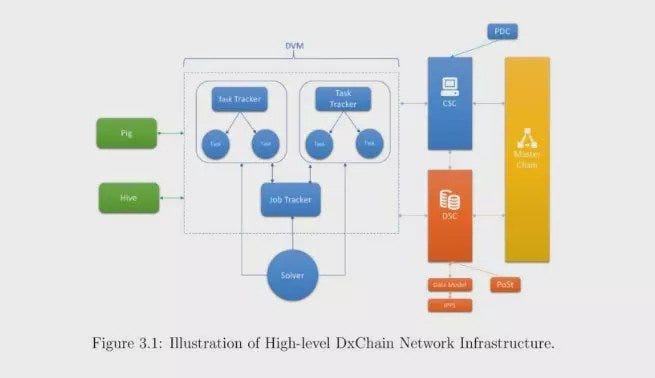
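The split-and-distribute step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not DxChain’s actual implementation: the function names, the piece size, and the miner identifiers are hypothetical, and replication, encryption, and on-chain bookkeeping are left out.

```python
import random


def split_into_pieces(data: bytes, piece_size: int) -> list:
    """Cut the file into consecutive pieces of at most piece_size bytes."""
    if piece_size < 1:
        raise ValueError("piece_size must be >= 1")
    return [data[i:i + piece_size] for i in range(0, len(data), piece_size)]


def distribute(pieces, miners, rng=None) -> dict:
    """Assign each piece to a randomly chosen miner, keeping its index."""
    if not miners:
        raise ValueError("at least one miner is required")
    rng = rng or random.Random()
    placement = {miner: [] for miner in miners}
    for index, piece in enumerate(pieces):
        placement[rng.choice(miners)].append((index, piece))
    return placement


def reassemble(placement: dict) -> bytes:
    """Collect the pieces from all miners and restore the original order."""
    indexed = [item for stored in placement.values() for item in stored]
    return b"".join(piece for _, piece in sorted(indexed, key=lambda item: item[0]))


data = b"an uploaded file that is larger than one piece"
pieces = split_into_pieces(data, piece_size=8)
placement = distribute(pieces, miners=["miner-a", "miner-b", "miner-c"])
assert reassemble(placement) == data
```

Keeping the piece index alongside each piece is what lets the file be rebuilt later; in a real network that mapping is the kind of metadata a blockchain could record.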

Meanwhile, DxChain believes that it is difficult to meet the requirements of data storage, computation, and privacy simultaneously by relying on a single master chain.

Therefore, DxChain designed a multi-chain architecture similar to Lightning Network, called the “chains-on-chain” architecture. It includes one master chain and two side chains, one for data storage and one for computation. The master chain is responsible only for recording events (e.g., transactions), which improves overall network performance enough to support large-scale data storage and high-speed computation.

The computation chain is used for parallel computing applications that process large volumes of data, which can in turn power machine learning and business intelligence.

Compared with other computation-related projects, DxChain is exploring a new direction. Dfinity, for example, is a cloud computing platform on the blockchain, but it does not answer the question of “where the platform’s data comes from.” DxChain does not just provide a storage solution; it also intends to answer that question by performing fine-grained operations on stored data. This kind of fine-grained data storage and computation will enable a new business model.

For example, let’s say a researcher hopes to collect data on “males under 35 years old who reside in California.” This kind of data transaction can occur only when a platform both holds the data and can perform fine-grained operations on it. DxChain can enable data to be traded and circulated while protecting the sensitive information in it.
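To make the researcher’s request concrete, here is a minimal Python sketch of a fine-grained selection that returns matching records with sensitive fields stripped out. It only illustrates the idea and says nothing about how DxChain implements it; the record layout, the field names, and the choice of which fields count as sensitive are all assumptions.

```python
SENSITIVE_FIELDS = frozenset({"name", "email"})  # hypothetical fields to withhold


def select_records(records, predicate, sensitive_fields=SENSITIVE_FIELDS):
    """Return the records that match predicate, without sensitive fields."""
    return [
        {key: value for key, value in record.items()
         if key not in sensitive_fields}
        for record in records
        if predicate(record)
    ]


def is_target(record) -> bool:
    """The researcher's criteria: male, under 35, resides in California."""
    return (record["gender"] == "male"
            and record["age"] < 35
            and record["state"] == "CA")


# Made-up sample data for illustration.
records = [
    {"name": "A", "email": "a@example.com", "gender": "male", "age": 29, "state": "CA"},
    {"name": "B", "email": "b@example.com", "gender": "female", "age": 31, "state": "CA"},
    {"name": "C", "email": "c@example.com", "gender": "male", "age": 42, "state": "CA"},
    {"name": "D", "email": "d@example.com", "gender": "male", "age": 33, "state": "NY"},
]

matches = select_records(records, is_target)
print(matches)
```

Only the first record satisfies all three criteria, and the researcher receives it without the name or email attached.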

Blockchain offers a fair value-trading model for internet information, and this happens to be the direction in which DxChain is putting its efforts.

DxChain also draws on Hadoop, a leading distributed architecture for centrally managed storage. Hadoop solves the problem of distributed data storage within a single organization or company. Blockchain, however, is better suited to establishing trust between different organizations and participants and to realizing multi-centered distributed storage.

DxChain combines Hadoop’s advantages with the unique mechanisms of blockchain to solve the problem of distributed storage and computation in a decentralized environment.

Public chains keep growing. Developers are pushing the limits of blockchain with new technological breakthroughs, which will gradually increase the overall maturity of the technology. Breakthroughs in transaction speed turn the public’s attention to storage and computation, while improvements in storage and computation depend on optimized transaction processing. Together, they move the whole infrastructure toward maturity.